Building data pipelines can be a right complicated and time-consuming task, you know? The data comes in all sorts of formats, is stored in different systems, and can be riddled with quality issues, not to mention it can be inconsistent as well. And to make matters worse, you gotta use a whole range of tools and technologies to query and transform the data. That includes everything from data replication tools, ETL/ELT, libraries, APIs management, orchestration, and transformation tools. Let’s explore the solutions: Snowflake Snowpipe Streaming and Dynamic Tables! ❄

The Challenge

Building data pipelines can be a right complicated and time-consuming task, you know? The data comes in all sorts of formats, stored in different systems, and can be riddled with quality issues, not to mention it can be inconsistent as well. And to make matters worse, you gotta use a whole range of tools and technologies to query and transform the data. That includes everything from data replication tools, ETL/ELT, libraries, APIs management, orchestration, and transformation tools.

To make life easier, the big trend in the market is shifting towards automation and simplification, you see? For instance, these days, folks are all about leveraging data replication tools to automate the hard work of extracting data from the source systems and landing it into their enterprise data lake. It's all about working smart!

Now, the next big challenge in building these data pipelines is data transformation. Traditionally, the ETL/ELT tools were complicated and needed a lot of manual effort. Yeah, sure, they were better than doing custom-coded solutions, but they weren't perfect, especially when dealing with non-relational data sources, you know? But recently, there’s a solution in the form of declarative pipelines.

With declarative pipelines, you define your pipeline using code, which means the tools can take care of a lot of the manual grunt work. This frees up data engineers from messing around with creating and managing database objects and managing DML code, so they can focus more on the business logic and adding business value.

Another ripper benefit of declarative pipelines is that they let you handle batch and streaming pipelines the same way. In the past, the tools for batch and streaming pipelines were different, so you had to set up separate infrastructures for both, which was a pain in the rear when you wanted to handle both batch data and low-latency streaming data for real-time use cases.

Collaboration with Snowflake

Our Chief Technology Officer, Adam Morton, had the pleasure to sit down with Alex Lei, a top-notch Senior Sales Engineer at Snowflake. Alex and Adam have worked on a few projects before, and Alex is a real expert, but he's still got that humble attitude. Alex discussed around new features which Snowflake offers.

What's Snowpipe Streaming all about?

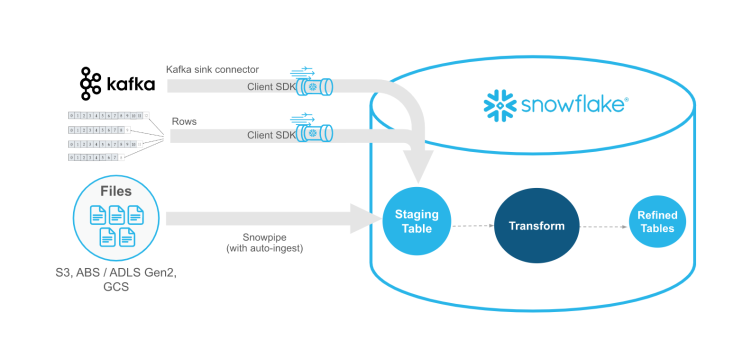

Snowpipe Streaming is the way to go when you want those low-latency loads of streaming data rows using the API. Instead of loading data from staged files like in bulk data loads or Snowpipe, the streaming ingest API writes data rows directly to Snowflake tables. This means quicker load times and lower costs for dealing with large volumes of data, making it a cracking tool for handling real-time data streams.

Snowpipe vs Snowpipe Streaming

Now, let's not get it mixed up, Snowpipe Streaming ain't here to replace the good ol' Snowpipe. It's here to work alongside it, see? You can use Snowpipe Streaming when you're dealing with data streaming in rows from Apache Kafka topics or similar sources, and it saves you the hassle of creating files to load data into Snowflake tables. The real beauty of it is that you get automatic, continuous loading of data streams into Snowflake as soon as the data becomes available.

And here's a real ripper thing: Snowpipe Streaming works on a serverless compute model, which means you don't have to worry about managing virtual warehouses. Snowflake takes care of the compute resources, and it'll automatically adjust the capacity based on the current Snowpipe Streaming load. Accounts are charged based on the compute for your Snowpipe Streaming migration compute costs and per-second client ingestion time.

So, you can just leave out the stage management (internal or external) and virtual warehouse stuff, making the configuration and maintenance a lot easier!

What's the deal with Dynamic Tables?

Dynamic Tables are the bee's knees! They're a new table type in Snowflake that lets you use simple SQL statements to define the result of your data pipelines. And the best part is that these tables periodically refresh as the data changes, only working on the new changes since the last refresh. Snowflake handles all the scheduling and orchestration, so you don't need any third-party tools, making life less complicated.

They announced this nifty feature at the Snowflake Summit in 2022, but back then, they called it materialised tables, but they've sorted it out and now we call 'em dynamic tables!

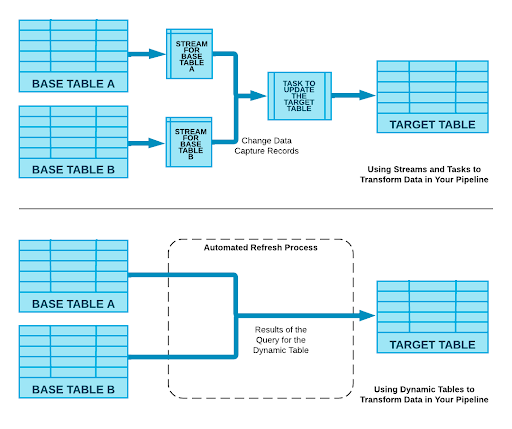

Prior to this feature engineers using Snowflake's native capabilities would have had to leverage streams and tasks as well as managing the database objects to achieve the same results. But with Dynamic Tables, it's a whole lot simpler! You can see in the diagram how much easier it is compared to the old way:

A much simpler way to transform data without having to manage Streams and Tasks.

So, how do Dynamic Tables work, you ask?

Well, you define your data transformations using SQL statements, and the results are automatically materialised and refreshed as the input data changes. It's like you define your target table within the SQL logic you provide, just like those dbt models, you know?

With Dynamic Tables, you get incremental refreshes, which means better performance and lower costs compared to the old-school data pipelines. And the best part is, you can define multiple dynamic tables and chain 'em together to create a DAG pipeline with hundreds of tables!

Here's how you would define a dynamic table:

CREATE [ OR REPLACE ] DYNAMIC TABLE

LAG = ' { seconds | minutes | hours | days }'

WAREHOUSE =

AS SELECT

Putting it all together!

In this demo, Alex generates some IoT-style device data and puts it on a Kafka topic. Then, he uses a combo of Snowpipe streaming to ingest the data as rows directly into a table in Snowflake in its native JSON format. After that, he specifies a dynamic table to join this semi-structured data with a 'normal' relational table with a refresh rate of 1 minute to get the result declaratively.

We hope you find this helpful! Follow us and subscribe to our newsletter to learn more about Snowflake ❄

These Posts are Suggested For You